Empower your employees to embrace the productivity benefits of generative AI and make informed choices to avoid AI risks.

While organizations like Samsung, Verizon, and Apple have made headlines this year by cracking down hard on the use of new generative AI tools at work, many others are still grappling with whether and how to allow employees to use these novel SaaS tools. Ambiguity remains: according to a recent Reuters / Ipsos poll, 25% of employees don’t know whether their company allows the use of generative AI tools like ChatGPT. Without clear acceptable use policies in place, organizations expose themselves to data privacy and compliance risks.

More alarming, the same poll found that while 28% of respondents said they regularly use ChatGPT at work, only 22% said their employers explicitly allowed such external tools. Our own research also shows that employees are very willing to work around blocked access to the SaaS tools they want to use for work, which further underscores the need for solutions that guide employees toward safe, compliant generative AI usage.

The first step toward safe use of generative AI tools is to develop and share an acceptable use policy. Arming employees with information on the dos and don’ts of interacting with generative AI can help them mitigate AI security risks, safeguard sensitive data, avoid ethical and legal pitfalls, and operate with more confidence. The tricky part is making sure the information reaches the employees who need it, at the moment they need it most.

We’ve talked before about how Nudge Security can help your organization embrace generative AI tools securely and at scale, including discovering new AI tools as they are introduced and accelerating security reviews of new applications. Now we’re extending that functionality with a new automated playbook to help you equip your employees to use AI tools safely and in compliance with your acceptable use policy.

Nudge Security’s new generative AI playbook discovers the AI tools your employees are using and “nudges” account holders with just-in-time guidance on acceptable use, reaching them right where and when they’re working.

Want to see it for yourself? Take a peek at our interactive tour:

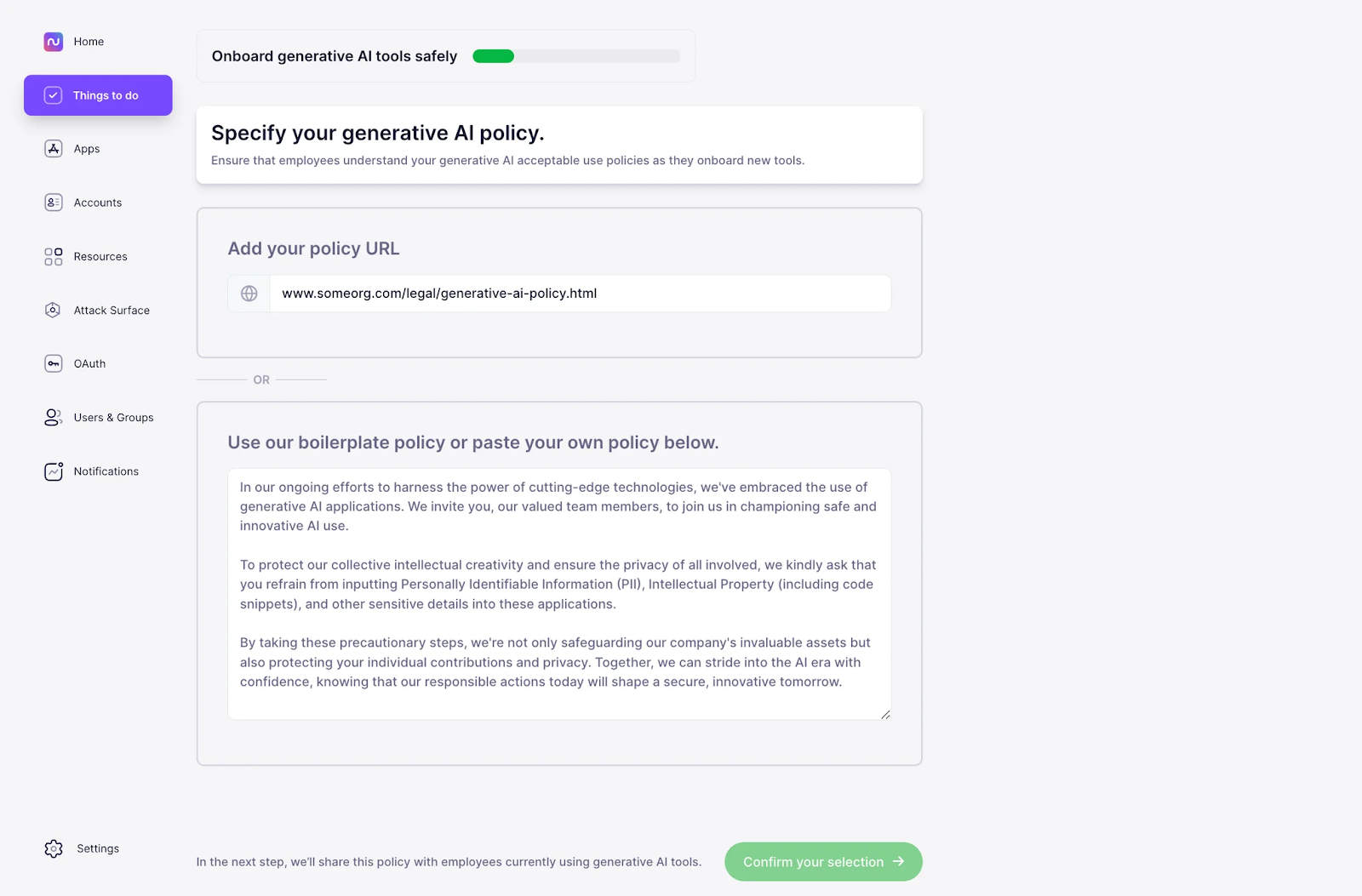

To get started, identify your organization’s policy for using generative AI. If you haven’t crafted one yet, you can use our template as a starting point. In the steps that follow, this policy will be shared with each employee who has created an AI tool account.

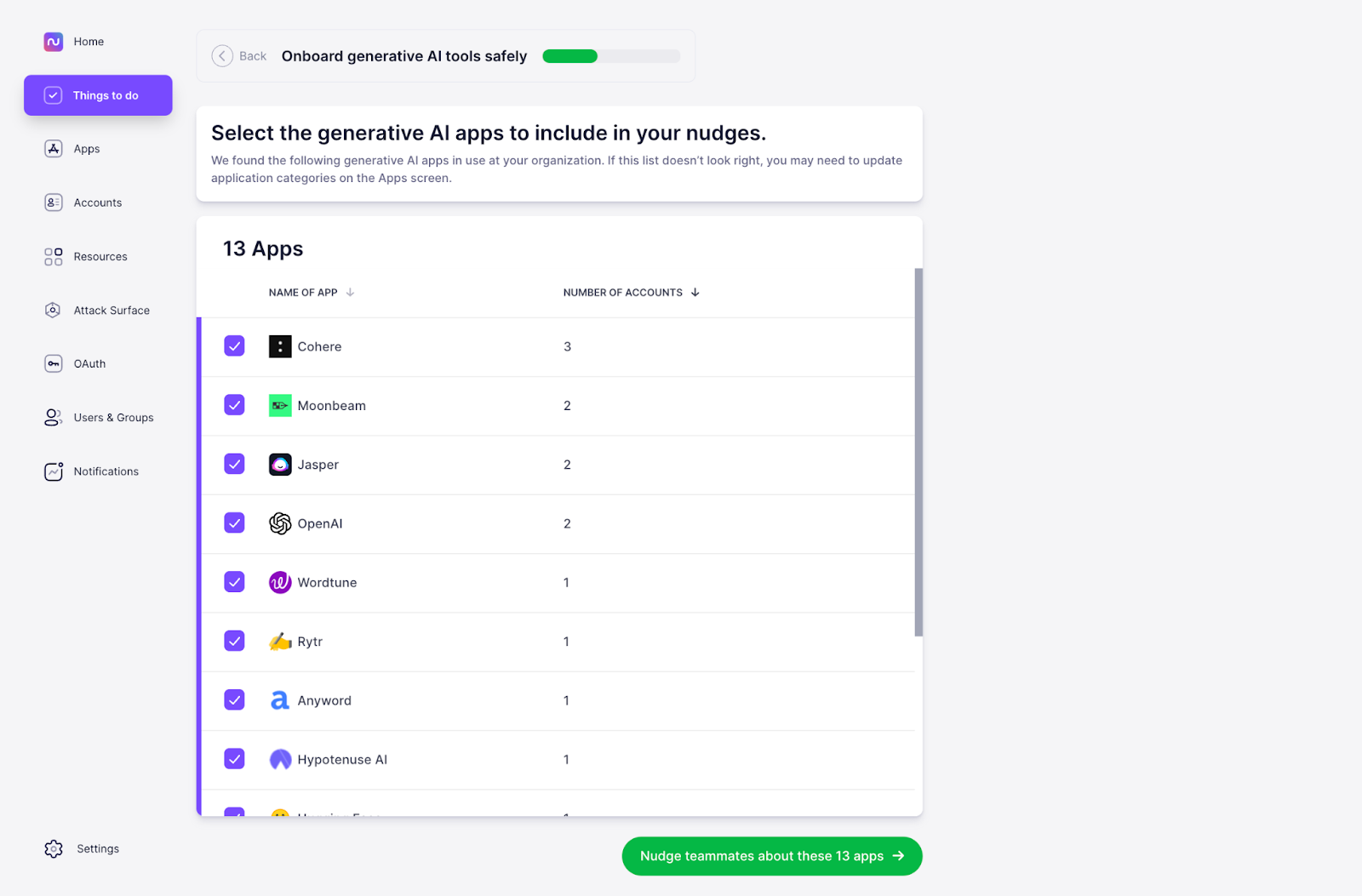

Nudge Security identifies all the generative AI applications your employees are using. You can choose whether to nudge users of all these apps with your acceptable use policy, or only a subset.

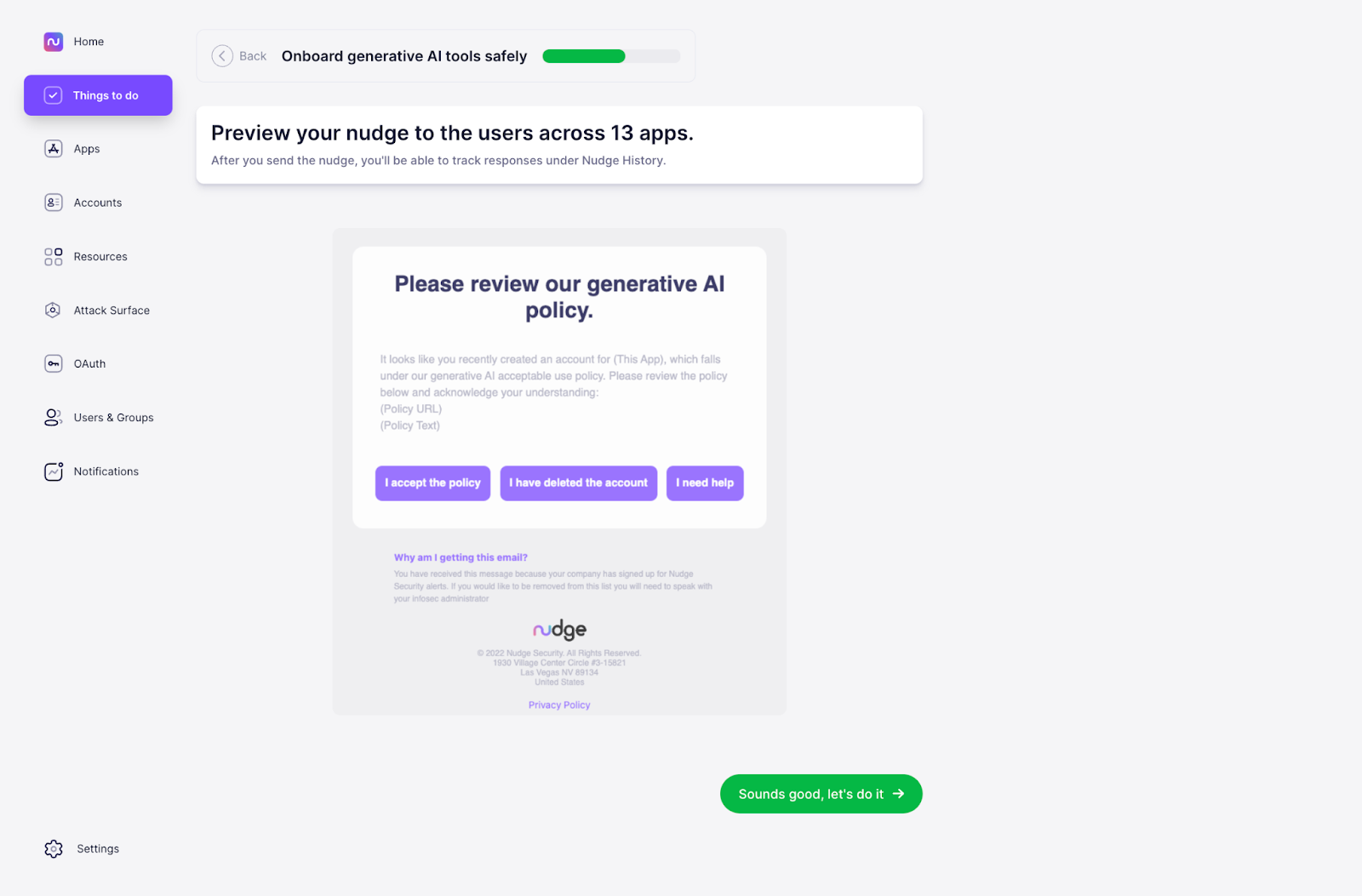

Each employee who holds an account for one of the apps you selected in the previous step will receive a nudge containing your organization’s acceptable use policy. Whether they receive the nudge via email or Slack, users will have the option to accept the policy, tell you they’ve deleted the account, or ask for help. You can track their responses under Nudge History.
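To make the flow concrete, here is a minimal Python sketch of the logic described above: pick the account holders for the selected apps, then tally the three possible responses. This is an illustration only, not Nudge Security's API. The names `Account`, `NudgeResponse`, `accounts_to_nudge`, and `summarize_responses` are hypothetical, and the sample employees and apps are made up.

```python
from collections import Counter
from dataclasses import dataclass
from enum import Enum


class NudgeResponse(Enum):
    """The three response options described in the text (names are hypothetical)."""
    ACCEPTED_POLICY = "accepted_policy"
    DELETED_ACCOUNT = "deleted_account"
    ASKED_FOR_HELP = "asked_for_help"


@dataclass(frozen=True)
class Account:
    """One employee's account in one application (hypothetical model)."""
    employee: str
    app: str


def accounts_to_nudge(accounts, selected_apps):
    """Return only the accounts whose app was selected for nudging."""
    return [a for a in accounts if a.app in selected_apps]


def summarize_responses(responses):
    """Count responses per option; None means the user has not replied yet."""
    counts = Counter(
        r.value if r is not None else "no_response" for r in responses.values()
    )
    return dict(counts)


if __name__ == "__main__":
    # Made-up sample data for illustration.
    accounts = [
        Account("ana@example.com", "ChatGPT"),
        Account("ben@example.com", "Bard"),
        Account("cy@example.com", "ChatGPT"),
    ]
    targets = accounts_to_nudge(accounts, {"ChatGPT"})
    responses = {targets[0]: NudgeResponse.ACCEPTED_POLICY, targets[1]: None}
    print(summarize_responses(responses))
```

Treating "no reply yet" as its own bucket keeps outstanding nudges visible alongside the three explicit responses.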

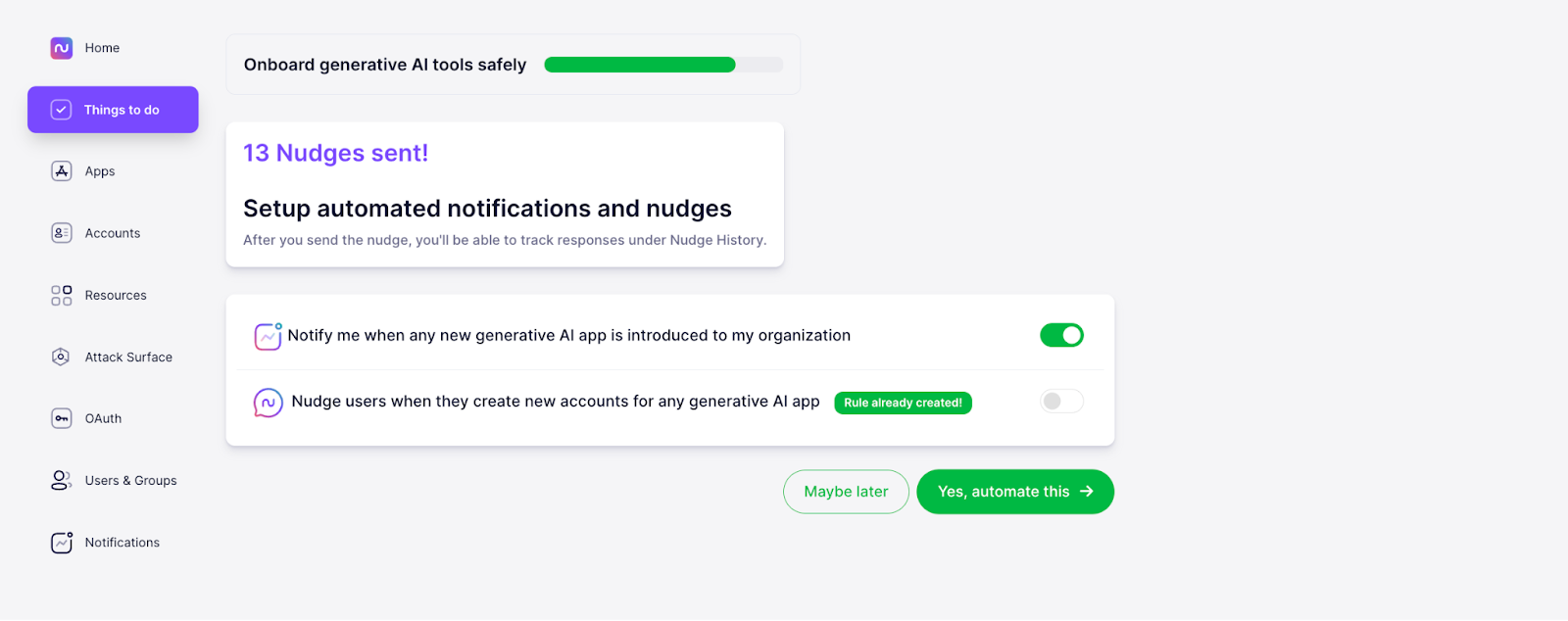

Now that you’ve nudged current users, it’s time to set up rules to help you operationalize your policy going forward. You can set up automated nudges that go out to users as soon as they sign up for new AI accounts, so they receive your acceptable use policy exactly when it’s relevant to them.

You can also set up notifications to be alerted any time one of your employees adopts a new generative AI application, enabling you to establish a repeatable process for reviewing and approving new tools. Within each application’s Overview page, Nudge Security provides security context to help inform and accelerate your reviews.
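The two rules above can be sketched as a small event handler. This is a hypothetical model rather than how Nudge Security implements or exposes its rules: the class `PlaybookRules`, the method `on_new_account`, and the action names are all assumptions, and the sketch assumes each incoming event has already been classified as a generative AI signup.

```python
from dataclasses import dataclass, field


@dataclass
class PlaybookRules:
    """Hypothetical rule set: tracks which AI apps the organization has already seen."""
    known_ai_apps: set = field(default_factory=set)

    def on_new_account(self, employee: str, app: str) -> list:
        """Return the actions to take when an employee signs up for an AI app.

        Assumes the event has already been identified as a generative AI signup.
        """
        actions = []
        if app not in self.known_ai_apps:
            # First time this app appears: alert the security team for review.
            actions.append(("alert_security_team", employee, app))
            self.known_ai_apps.add(app)
        # Every new account holder receives the acceptable use policy.
        actions.append(("send_policy_nudge", employee, app))
        return actions


if __name__ == "__main__":
    rules = PlaybookRules(known_ai_apps={"ChatGPT"})
    print(rules.on_new_account("ana@example.com", "ChatGPT"))
    print(rules.on_new_account("ben@example.com", "NewAITool"))
```

Keeping the alert and the nudge as separate actions mirrors the text: the nudge targets the employee, while the alert feeds the security team's review process.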
