While the convenience of integration can boost productivity, the hidden dangers and cybersecurity risks can be significant.

This article was updated on Sept 29, 2025.

Since launching ChatGPT in 2022, OpenAI has defied expectations with a steady stream of product announcements and enhancements. One such announcement came on May 16, 2024, and for most consumers, it probably felt innocuous. Titled “Improvements to data analysis in ChatGPT,” the post outlines how users can add files directly from Google Drive and Microsoft OneDrive. Pretty great, right? Maybe.

Yes, tools like ChatGPT are transforming the way businesses operate, offering unparalleled efficiency and productivity. But while the promise of convenience is significant, it also brings a host of potential security risks: connectors like these inevitably widen the attack surface, because these applications often require access to environments that hold highly sensitive personal and organizational data.

When you connect ChatGPT to your cloud-based storage service, you can add files to your prompts directly, rather than downloading them to your desktop and then uploading them to ChatGPT. ChatGPT’s integration with Google Drive and Microsoft OneDrive allows users to access and manipulate documents, spreadsheets, and other files directly through conversational AI. Of course, this can streamline workflows, enhance collaboration, and reduce the time spent on routine tasks.

When you connect your organization’s Google Drive or OneDrive account, you grant ChatGPT extensive permissions covering not only your personal files but also resources across your entire shared drive. As you might imagine, the benefits of this kind of extensive integration come with an array of cybersecurity challenges. Is it safe to connect ChatGPT to Google Drive/Microsoft OneDrive?

When ChatGPT accesses sensitive data stored in Google Drive or OneDrive, it raises serious privacy concerns. This data might include personal information, confidential business documents, or proprietary research. Any breach or misuse of this information could lead to severe consequences, including identity theft, corporate espionage, and legal liabilities.

Integrating ChatGPT with cloud storage services necessitates granting access permissions. If these permissions are not managed correctly, it could lead to unauthorized access. Hackers could exploit vulnerabilities in the integration process to gain access to sensitive data. Once they have access, they could manipulate, steal, or delete critical files, causing significant disruption to business operations.
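One practical way to catch permissions that are broader than they need to be is to review the scopes each grant carries. Here’s a minimal sketch, assuming you already have a grant’s scope list in hand (for example, from an audit log). The scope strings are real Google Drive and Microsoft Graph permission names, but the broad/narrow buckets are an illustrative judgment call, the `classify_grant` helper is hypothetical, and this is not a list of what ChatGPT’s connectors actually request.

```python
# Illustrative scope catalog. These are real Google Drive and Microsoft Graph
# scope names, but NOT a statement of what ChatGPT's connectors request.
BROAD_SCOPES = {
    "https://www.googleapis.com/auth/drive": "See, edit, create, and delete all Drive files",
    "https://www.googleapis.com/auth/drive.readonly": "See and download all Drive files",
    "Files.Read.All": "Read all files the signed-in user can access",
    "Files.ReadWrite.All": "Read and write all files the signed-in user can access",
    "Sites.Read.All": "Read items in all SharePoint site collections",
}
NARROW_SCOPES = {
    "https://www.googleapis.com/auth/drive.file": "Only files the app created or the user opened with it",
    "Files.Read": "Read the signed-in user's own files",
}


def classify_grant(scopes: list[str]) -> dict:
    """Sort a grant's scopes into broad / narrow / unreviewed buckets and rate it."""
    result = {"broad": [], "narrow": [], "unreviewed": []}
    for scope in scopes:
        if scope in BROAD_SCOPES:
            result["broad"].append(scope)
        elif scope in NARROW_SCOPES:
            result["narrow"].append(scope)
        else:
            # Anything not in the catalog needs a human to look at it.
            result["unreviewed"].append(scope)
    # A single broad scope is enough to treat the whole grant as high risk.
    if result["broad"]:
        result["risk"] = "high"
    elif result["unreviewed"]:
        result["risk"] = "review"
    else:
        result["risk"] = "low"
    return result
```

Treating any single broad scope as high risk is deliberately conservative, and unrecognized scopes land in a `review` bucket rather than being silently passed.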

AI-driven integrations could become a target for phishing and social engineering attacks. Cybercriminals might attempt to trick users into granting access to their cloud storage or to execute malicious commands through ChatGPT. Given the conversational nature of ChatGPT, users might not always recognize the subtle manipulations used by attackers.

AI systems like ChatGPT can retain, and in some configurations train on, the data they process. If that data is not adequately secured, sensitive information could inadvertently be shared or exposed. This is particularly concerning in industries like healthcare, finance, and legal services, where confidentiality is paramount.

Organizations that integrate ChatGPT with cloud services must still comply with regulations such as GDPR, HIPAA, and CCPA. Ensuring compliance is challenging but crucial: any mishandling of data can lead to hefty fines and damage to a company’s reputation.

In Google Workspace, there are a couple of ways to identify and investigate activity associated with the ChatGPT connection.

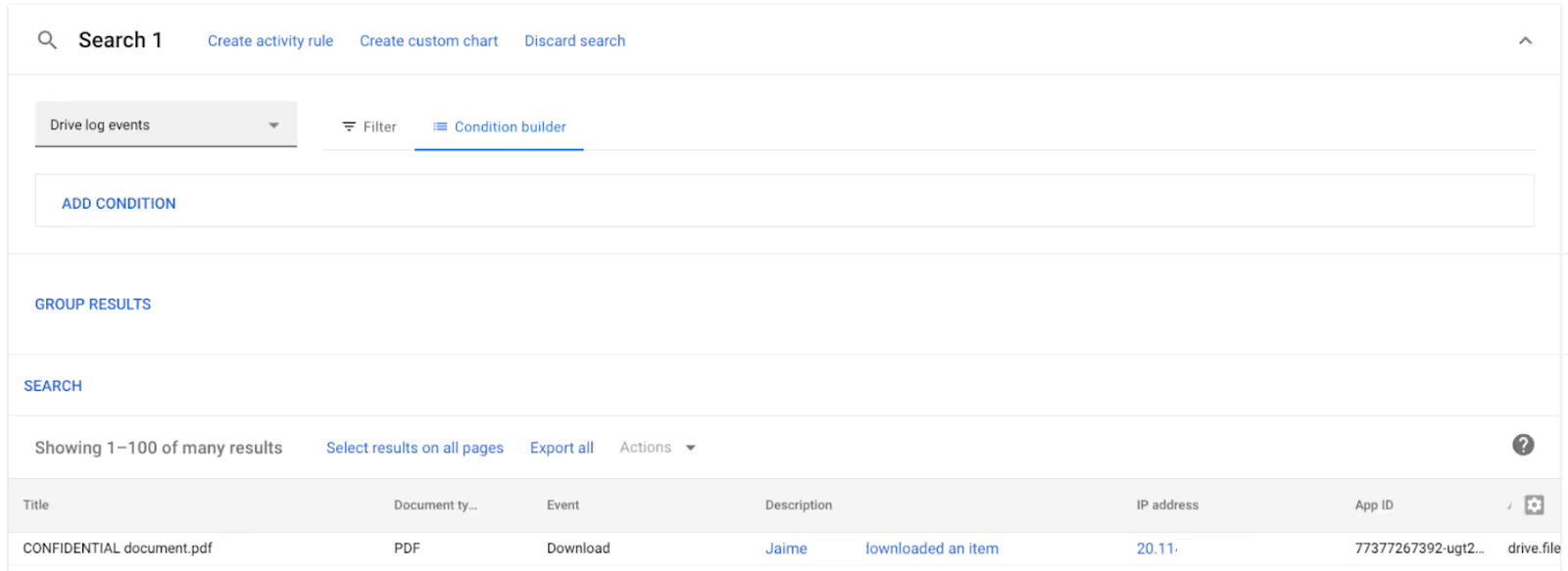

From Google Workspace’s Admin Console, navigate to Reporting > Audit and investigation > Drive log events. Here you’ll see a list of Google Drive resources accessed.

You can also investigate the connection’s API call activity under Reporting > Audit and investigation > OAuth log events.
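If you’d rather pull the same OAuth log events programmatically, the Admin SDK Reports API exposes them under the `token` application. Here’s a minimal sketch, assuming you’ve already built a Reports API client with google-api-python-client and admin credentials. The helper names (`fetch_token_activities`, `filter_app_events`) are hypothetical, and matching on an `app_name` that contains "chatgpt" is an assumption: check how the connection is actually labeled in your own logs. Passing `applicationName="drive"` to the same `activities().list` call returns Drive log events instead.

```python
from typing import Any


def fetch_token_activities(service: Any, max_pages: int = 10) -> list[dict]:
    """Page through OAuth token audit events from the Admin SDK Reports API.

    `service` is assumed to be built with google-api-python-client, e.g.
    build("admin", "reports_v1", credentials=creds).
    """
    items: list[dict] = []
    activities = service.activities()
    request = activities.list(userKey="all", applicationName="token")
    pages = 0
    while request is not None and pages < max_pages:
        response = request.execute()
        items.extend(response.get("items", []))
        # list_next returns None once the response has no nextPageToken.
        request = activities.list_next(request, response)
        pages += 1
    return items


def _event_params(event: dict) -> dict:
    """Flatten an event's parameter list into {name: value}."""
    flat = {}
    for param in event.get("parameters", []):
        for key in ("value", "multiValue", "intValue", "boolValue"):
            if key in param:
                flat[param["name"]] = param[key]
                break
    return flat


def filter_app_events(activities: list[dict], app_substring: str = "chatgpt") -> list[dict]:
    """Keep token events whose app_name contains app_substring (case-insensitive)."""
    rows = []
    for activity in activities:
        actor = activity.get("actor", {}).get("email", "")
        when = activity.get("id", {}).get("time", "")
        for event in activity.get("events", []):
            params = _event_params(event)
            app_name = str(params.get("app_name", ""))
            if app_substring.lower() in app_name.lower():
                rows.append({
                    "time": when,
                    "actor": actor,
                    "event": event.get("name", ""),  # e.g. authorize, revoke
                    "app_name": app_name,
                    "scopes": params.get("scope", []),
                    "method": params.get("method_name"),  # set on API-call events
                })
    return rows
```

Each returned row shows who authorized or used the app, when, and which scopes or API methods were involved.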

It’s important to note that ChatGPT now integrates with a full suite of apps, including Gmail, Google Calendar, GitHub, Dropbox, SharePoint, and Box, which only widens the attack surface.

Plus, recent research presented at Black Hat 2025 revealed a disturbing new risk tied to ChatGPT’s file integrations. Security researchers demonstrated an exploit called AgentFlayer, where a single malicious Google Drive or OneDrive document can stealthily compromise an organization’s data.

Here’s how it works: attackers embed hidden prompt-injection instructions into a document — often using tiny white text that’s invisible to the human eye. When ChatGPT processes the file through its connector, those invisible instructions get executed. Without any clicks or user interaction, ChatGPT can be tricked into leaking sensitive data like API keys, corporate credentials, or internal documents through a crafted output link.
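Security teams that want to spot-check documents before they’re shared broadly could look for the formatting tricks described above. Here’s a rough heuristic sketch for `.docx` files only, using Python’s standard library: it flags text runs that are directly formatted as white (`FFFFFF`), very small, or hidden. It is not how OpenAI’s mitigations work, it ignores formatting inherited from styles or themes, and native Google Docs would need to be exported to `.docx` first. The function name `suspicious_runs` and the 2-point size threshold are assumptions.

```python
import io
import zipfile
import xml.etree.ElementTree as ET

# WordprocessingML namespace used by word/document.xml inside a .docx file.
W = "{http://schemas.openxmlformats.org/wordprocessingml/2006/main}"


def suspicious_runs(docx_bytes: bytes, max_half_points: int = 4) -> list[tuple[str, list[str]]]:
    """Return (text, reasons) for runs formatted to be hard to see.

    Heuristic only: checks direct run formatting, not styles or themes.
    w:sz is measured in half-points, so the default of 4 means 2 pt or smaller.
    """
    with zipfile.ZipFile(io.BytesIO(docx_bytes)) as zf:
        root = ET.fromstring(zf.read("word/document.xml"))

    findings = []
    for run in root.iter(W + "r"):
        text = "".join(t.text or "" for t in run.iter(W + "t"))
        rpr = run.find(W + "rPr")
        if not text.strip() or rpr is None:
            continue  # empty run, or no direct formatting to inspect
        reasons = []
        color = rpr.find(W + "color")
        if color is not None and color.get(W + "val", "").upper() == "FFFFFF":
            reasons.append("white text")
        size = rpr.find(W + "sz")
        size_val = size.get(W + "val", "") if size is not None else ""
        if size_val.isdigit() and int(size_val) <= max_half_points:
            reasons.append("tiny font")
        vanish = rpr.find(W + "vanish")
        if vanish is not None and vanish.get(W + "val", "true") not in ("0", "false"):
            reasons.append("hidden")
        if reasons:
            findings.append((text, reasons))
    return findings
```

White text is only invisible on a white background, so treat a hit as a reason to look closer rather than as proof of an injection attempt.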

What makes AgentFlayer so dangerous is that it’s a zero-click attack. Unlike traditional phishing, employees don’t have to open a suspicious link or download a shady file. Simply having ChatGPT process a poisoned document from a connected Drive or OneDrive account is enough to trigger the breach.

OpenAI has already rolled out mitigations, but this research highlights an important lesson: when AI systems gain direct access to enterprise data sources, the attack surface expands in ways we’ve never seen before.

For security teams, this means that continuous monitoring of OAuth integrations cannot be put off.

You need visibility into every OAuth grant that connects an app like ChatGPT to your organization’s data.

This is exactly the kind of visibility and control that platforms like Nudge Security are designed to provide—to make sure that even when new threats emerge, you can act fast and keep your SaaS environment safe.

Nudge Security surfaces your entire organization’s OAuth grants, such as those granted to ChatGPT, within a filterable OAuth dashboard that includes grant type (sign-in or integration), activity, and risk insights. Filter by category to see all grants associated with AI tools.

To drill down into a grant, click it to open a detail screen, where you can review a risk profile, access details, scopes granted, and more.

To nudge the grant’s creator to take a specific action, or to revoke the grant immediately, use the icons at the top of the page.
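Outside of Nudge Security, Google Workspace admins can also revoke a user’s grant directly through the Admin SDK Directory API, which provides `tokens.list` and `tokens.delete` methods. Here’s a minimal sketch, assuming a Directory API client built with google-api-python-client and credentials authorized for the `https://www.googleapis.com/auth/admin.directory.user.security` scope. The helper name `revoke_matching_tokens` is hypothetical, and matching on the token’s `displayText` assumes the app identifies itself as ChatGPT.

```python
from typing import Any


def revoke_matching_tokens(
    service: Any,
    user_email: str,
    app_substring: str = "chatgpt",
    dry_run: bool = True,
) -> list[str]:
    """Find (and optionally revoke) a user's OAuth tokens for a matching app.

    `service` is assumed to be an Admin SDK Directory API client, e.g.
    build("admin", "directory_v1", credentials=creds).
    Returns the client IDs that matched.
    """
    tokens = service.tokens()
    listing = tokens.list(userKey=user_email).execute()
    matched = []
    for token in listing.get("items", []):
        # displayText is the app name shown to the user; matching on it is an assumption.
        if app_substring.lower() in token.get("displayText", "").lower():
            client_id = token["clientId"]
            matched.append(client_id)
            if not dry_run:
                # Revokes every token this user issued to that client ID.
                tokens.delete(userKey=user_email, clientId=client_id).execute()
    return matched
```

It defaults to a dry run that only reports matching client IDs, so you can confirm the match before anything is revoked.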

Finally, you can set up a custom rule to ensure that you are notified when a user at your organization creates an OAuth grant for ChatGPT—or any other app for that matter.

While the integration of ChatGPT with Google Drive and Microsoft OneDrive offers immense potential, it also opens the door to significant security risks. Organizations must approach these integrations with a clear understanding of the potential threats and implement comprehensive security measures to mitigate them.

Nudge Security provides the visibility as well as the toolset that allow businesses to harness the benefits of AI-driven productivity without compromising on data security. Start a free, 14-day trial for a close look at your org’s OAuth grants.